In 1999, NASA’s Mars Climate Orbiter was lost when it dipped far too deep into the Martian atmosphere while trying to enter orbit.

The cause? One piece of ground software reported thruster data in imperial units (pound-force seconds), while the navigation software expected metric units (newton-seconds).

The cost? $193 million.

Between 1985 and 1987, a radiation-therapy machine called the Therac-25 gave patients massive radiation overdoses.

The cause? Software flaws, including a race condition and a counter overflow that could bypass a safety check.

The cost? Six patients received massive overdoses, and several of them died.

The bottom line is this: software bugs are costly, both in terms of money and human lives.

The best way to catch them before they cause damage is to have a thorough software testing regimen in place.

However, with dozens of testing techniques and platforms available to you, which options make the most sense for your project?

What Is Software Testing and Why Is It Important?

Software testing is the process of ensuring that an app, system, or other piece of software aligns as closely as possible with the project requirements.

To do this, testers check for bugs, errors, usability issues, and functionalities that don’t address the end user’s needs.

Testing operates on the assumption that software projects can never be 100% bug-free, no matter how big the company or talented the development team is.

Just look at Google’s Nest thermostat, which suffered a software glitch in 2016 that drained its batteries and left many homes without heating in the middle of winter.

Remember, this is one of the biggest tech companies in the world, with some of the brightest minds on the planet, and even it isn’t immune.

Issues like these are pervasive and all too common, hence the need to have a robust testing process to catch errors as early as possible.

Still, software testing doesn’t exist just to prevent the negative. It also has plenty of positive benefits.

One of the most important is lowering project costs.

The fact is that it’s much cheaper to fix a problem during the early development phases than during the later stages. AT&T, for instance, suffered a major network outage in 1990 after a system upgrade that contained a single line of erroneous code.

The resulting damage cost $60 million.

Proper software testing also leads to safer and more secure applications. This is especially crucial for mission-critical industries like finance and health.

For such a simple goal, testing is a very complex process. There are countless methodologies and techniques to consider. Part of the challenge is deciding which one is best for your project.

Before we delve into that further, let’s first discuss two major approaches to testing: manual and automated.

Software Testing Overview: Manual and Automated

Software testing can either be manual or automated.

Manual testing is done by humans without the aid of automation. Here, testers use test cases to know which inputs and actions to take and which expected results to check.

Because it involves human judgment, manual testing can catch subtle issues that a script might miss. However, it can be time-consuming, repetitive, and error-prone.

On the other hand, automated testing lets the computer execute test cases autonomously, using test scripts.

As a result, it can test software much faster and isn’t susceptible to lapses due to human error.

So, the question is: which one should you go for?

The quick answer is – both. Here’s why.

A complete testing regimen requires both functional and non-functional testing.

Functional testing deals with the objective aspects of the software. For example, checking if the login page of an app works falls under functional testing.

Given a set of inputs, the feature either works or it doesn’t.

Because of its predictability and “black or white” nature, functional testing works great for both manual and automated testing.
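To make the “works or it doesn’t” idea concrete, here is a minimal sketch of an automated functional test for a login feature, written in Python. The `login` function and its in-memory user store are hypothetical stand-ins for a real authentication backend, not part of any particular framework. The tests are plain functions using `assert`, which is the style the pytest test runner discovers and runs.

```python
import hashlib
import hmac

# Hypothetical in-memory user store standing in for a real backend.
# (Plain SHA-256 keeps the sketch short; real systems need a slow password hash.)
_USERS = {"alice": hashlib.sha256(b"correct-horse").hexdigest()}


def login(username: str, password: str) -> bool:
    """Return True only if the username exists and the password matches."""
    stored = _USERS.get(username)
    if stored is None:
        return False
    candidate = hashlib.sha256(password.encode()).hexdigest()
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(candidate, stored)


# Functional tests: given a set of inputs, the feature either works or it doesn't.
def test_valid_credentials_succeed():
    assert login("alice", "correct-horse") is True


def test_wrong_password_fails():
    assert login("alice", "wrong-password") is False


def test_unknown_user_fails():
    assert login("bob", "correct-horse") is False
```

Each test checks one input combination against one expected outcome, which is why this kind of check is easy to automate.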

Non-functional testing deals with the more subjective areas of your software. This often involves usability, reliability, and security, and some of these qualities, usability in particular, are very difficult or impossible to automate.

After all, only a human can tell if the user interface is intuitive or if the color scheme works well.

Therefore, you need both manual and automated approaches to cover all bases.

With that out of the way, let’s go into the many different types of testing you’ll encounter.

Different Types of Software Testing

The main thing to understand about software testing is that there’s no one-size-fits-all solution.

Instead, different techniques have their uses, pros, and cons, and you’ll often find yourself using most or all of the approaches on a single project.

Unit Testing

Unit testing is a basic approach that verifies the individual components of your software.

It’s a form of white box testing, which requires the tester to know the internal code and structure for proper evaluation.

A unit is any self-contained component of your software that functions independently without connecting to any outside resource. This is often a code block, function, or class, but it can also be an entire module or feature.

Therefore, you get free rein in how granular you want to be during unit testing.

Unit testing is the first testing process used by developers to verify the integrity of their code and catch the most fundamental bugs.

Unit tests can be run manually, but they take particularly well to automation, which lets developers rerun them every time the code changes.

The importance of unit testing can’t be overstated. It ensures each piece works flawlessly before it’s combined with other components, which can introduce brand-new complications.

You can use a variety of tools for unit testing, including Jasmine and VectorCAST.
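As an illustration, here is a small unit test sketch for a hypothetical image-resizing helper, `fit_within`, that a photo app might use. Because unit testing is white box, the tests are chosen by reading the code: one for each branch (invalid input, an image that already fits, and an image that needs scaling). The helper and its behavior are assumptions made for this example; the tests use the same pytest-compatible plain-function style and need no third-party imports.

```python
def fit_within(width: int, height: int, max_side: int) -> tuple[int, int]:
    """Scale (width, height) down so the longer side is at most max_side,
    preserving the aspect ratio. Images that already fit are unchanged."""
    if width <= 0 or height <= 0 or max_side <= 0:
        raise ValueError("dimensions must be positive")
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    # Never round a side down to zero pixels.
    return max(1, round(width * scale)), max(1, round(height * scale))


# One test per branch of fit_within (white box: chosen by reading the code).
def test_rejects_non_positive_dimensions():
    try:
        fit_within(0, 100, 50)
    except ValueError:
        return
    raise AssertionError("expected ValueError for a zero width")


def test_image_that_fits_is_unchanged():
    assert fit_within(800, 600, 1000) == (800, 600)


def test_large_landscape_is_scaled_down():
    assert fit_within(4000, 3000, 1000) == (1000, 750)


def test_large_portrait_is_scaled_down():
    assert fit_within(1080, 1920, 960) == (540, 960)
```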

Integration Testing

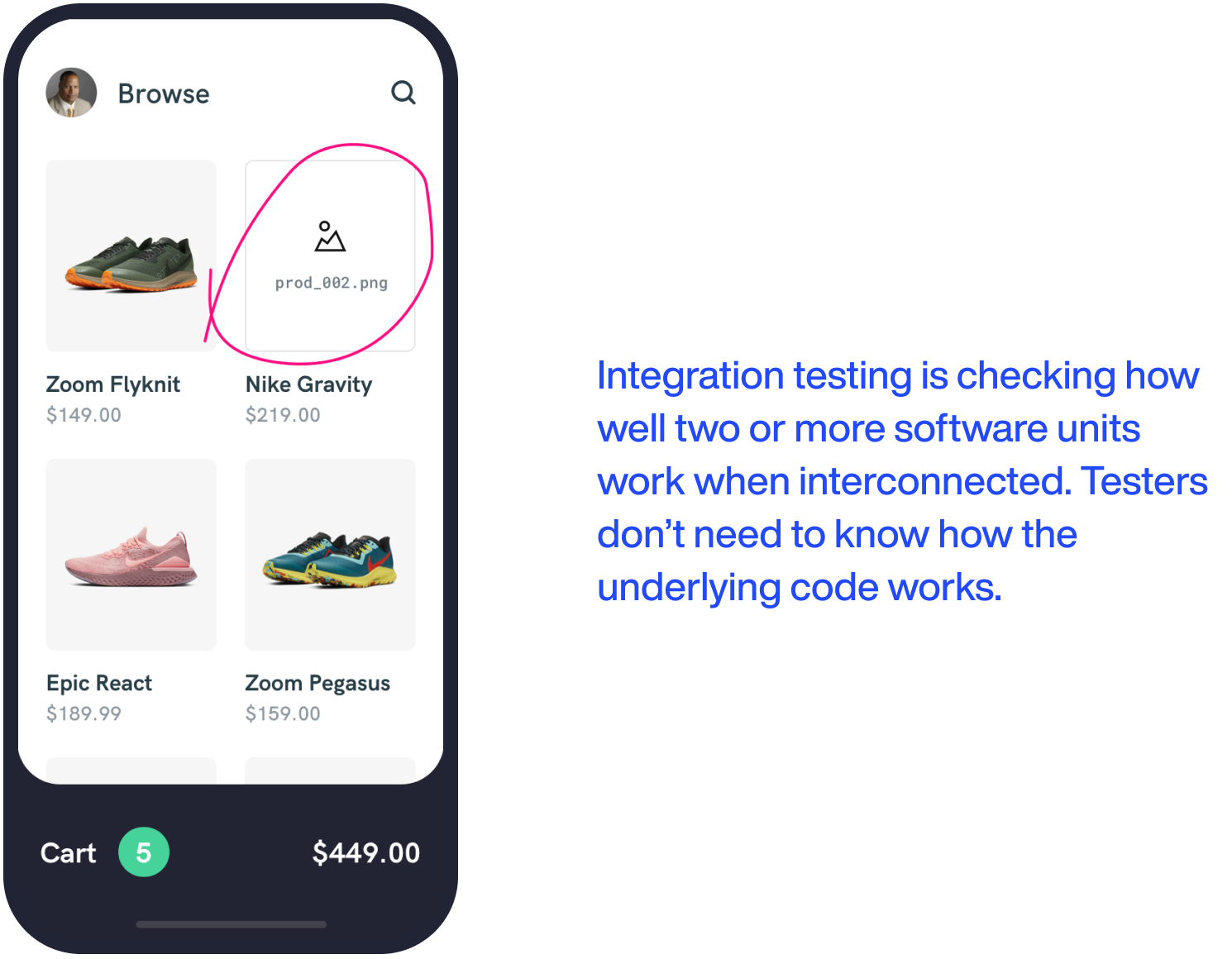

The next logical step from unit testing is integration testing. Here, you evaluate how well two or more software units work when interconnected.

For example, let’s say you’ve already tested your photo-sharing app’s Camera and Share features, and each works bug-free.

The next step is to check whether you can take a photo and share it online. That’s integration testing.
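Here is a rough sketch of that integration test. `Camera`, `ShareService`, and `Photo` are hypothetical classes assumed for the example; the point is that the test wires the real units together instead of testing each in isolation. If the camera switched to a format the share service rejects (say, `heic`), both units could still pass their own unit tests while this test would fail.

```python
from dataclasses import dataclass, field


@dataclass
class Photo:
    pixels: bytes
    fmt: str = "jpeg"


class Camera:
    """Hypothetical Camera unit, already unit-tested on its own."""

    def take_photo(self) -> Photo:
        return Photo(pixels=b"\xff\xd8 fake image data")


@dataclass
class ShareService:
    """Hypothetical Share unit: accepts only JPEG or PNG photos."""

    posted: list[Photo] = field(default_factory=list)

    def share(self, photo: Photo) -> str:
        if photo.fmt not in {"jpeg", "png"}:
            raise ValueError(f"unsupported format: {photo.fmt}")
        self.posted.append(photo)
        return f"post-{len(self.posted)}"


# Integration test: use the real Camera and ShareService together and check
# that what one produces is something the other accepts.
def test_take_photo_then_share_it():
    camera, sharing = Camera(), ShareService()
    post_id = sharing.share(camera.take_photo())
    assert post_id == "post-1"
    assert sharing.posted[0].fmt == "jpeg"
```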

In contrast to unit testing, integration testing is often carried out as a black box test. Testers don’t need to know how the underlying code works to conduct a thorough evaluation.

Integration testing is vital because no matter how well-written each software module is, it’s hard to predict how they’ll behave once joined.

This problem is significantly heightened when there are different teams or programmers developing the individual components.

Dealing with sudden changes in requirements is also a strength of integration testing.

Often, new features or functions get added in the middle of development.

A combination of unit and integration testing ensures that the new module works and that adding it in won’t disrupt the functionality of the rest of the system.

Smoke Testing

Smoke testing is a preliminary or “gatekeeper” procedure that establishes whether the software is ready for further testing or not.

Thus, it often precedes other more rigorous tests like integration and acceptance testing.

This type of testing got its name from a hardware testing procedure, which checked whether a device caught fire and smoked when plugged in for the first time.

If that happened, it indicated a fundamental issue that didn’t warrant any further testing.

In software, it works in much the same way.

Let’s take our hypothetical photo-sharing app as an example.

Part of smoke testing might be to verify a basic feature—can a user log in, take photos, and share them with other users?

If the app can’t even do this without errors, any further testing will only waste time. In this case, the software is sent back for fixes before going further into the QA process.

Speed is the top priority with smoke testing, as you want to uncover significant problems as quickly as possible with minimal effort.

Thus, smoke test cases only cover broad functionalities and don’t go into the software details.

Generally, smoke testing is done manually. However, automation can be used if it’s done often enough (such as when releasing multiple test builds).
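When smoke tests are automated, they can be as simple as an ordered list of broad checks that stops at the first failure. The sketch below assumes a hypothetical `PhotoApp` class standing in for a real build; `run_smoke_tests` rejects the build as soon as any core step fails or raises an exception.

```python
from typing import Callable, Optional


class PhotoApp:
    """Hypothetical photo-sharing app standing in for a real build."""

    def __init__(self) -> None:
        self.user: Optional[str] = None
        self.photos: list[str] = []

    def log_in(self, user: str) -> bool:
        self.user = user
        return True

    def take_photo(self) -> str:
        if self.user is None:
            raise RuntimeError("not logged in")
        self.photos.append(f"photo-{len(self.photos) + 1}")
        return self.photos[-1]

    def share(self, photo: str) -> bool:
        return photo in self.photos


Check = tuple[str, Callable[[], bool]]


def smoke_checks(app: PhotoApp) -> list[Check]:
    """Broad, shallow checks of the core user journey only."""
    return [
        ("user can log in", lambda: app.log_in("tester")),
        ("user can take a photo", lambda: bool(app.take_photo())),
        ("user can share a photo", lambda: app.share(app.photos[-1])),
    ]


def run_smoke_tests(checks: list[Check]) -> bool:
    """Run checks in order and stop at the first failure.

    Returns True if the build is stable enough for deeper testing."""
    for name, check in checks:
        try:
            passed = check()
        except Exception as exc:  # a crash counts as a failed check
            print(f"SMOKE FAIL: {name} ({exc!r})")
            return False
        if not passed:
            print(f"SMOKE FAIL: {name}")
            return False
    print("Smoke tests passed: build accepted for further testing.")
    return True
```

Calling `run_smoke_tests(smoke_checks(PhotoApp()))` on this stand-in app passes all three checks; in a real pipeline, the checks would drive the actual build instead.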

User Acceptance Testing

User acceptance testing (UAT) is the last and most critical testing phase before the software is deemed ready for release.

The idea is that if end-users accept your software, then you know it’s stable enough for deployment.

If it’s the first time you’ve heard of UAT, you might be more familiar with one of its most common forms: beta testing.

Project teams will often recruit volunteers as beta testers or have a beta test build of the software available for a limited time, as is the case with games.

The main goal of UAT is to verify if the software is a good fit for the end-user.

Is it solving their problems? Is it intuitive to use? Does it have all the features they need? Are there still bugs left?

UAT is critical because even if your software is flawless from a technical standpoint (i.e., all features are working bug-free and as intended), it will still fail if it doesn’t solve the end user’s problems.

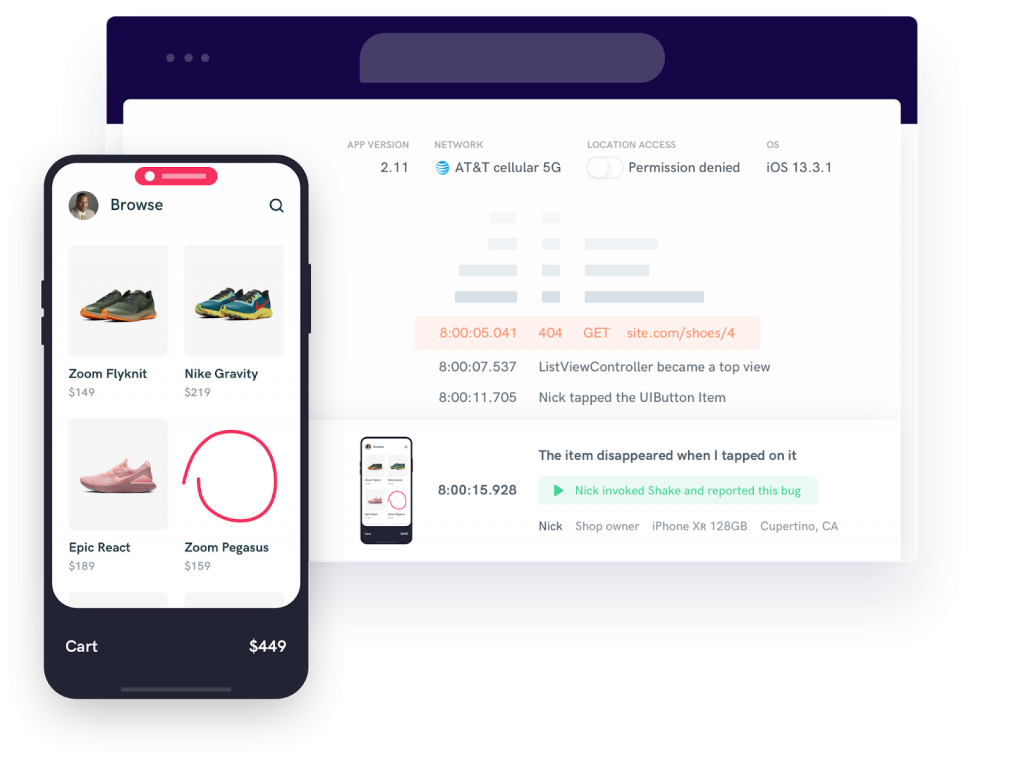

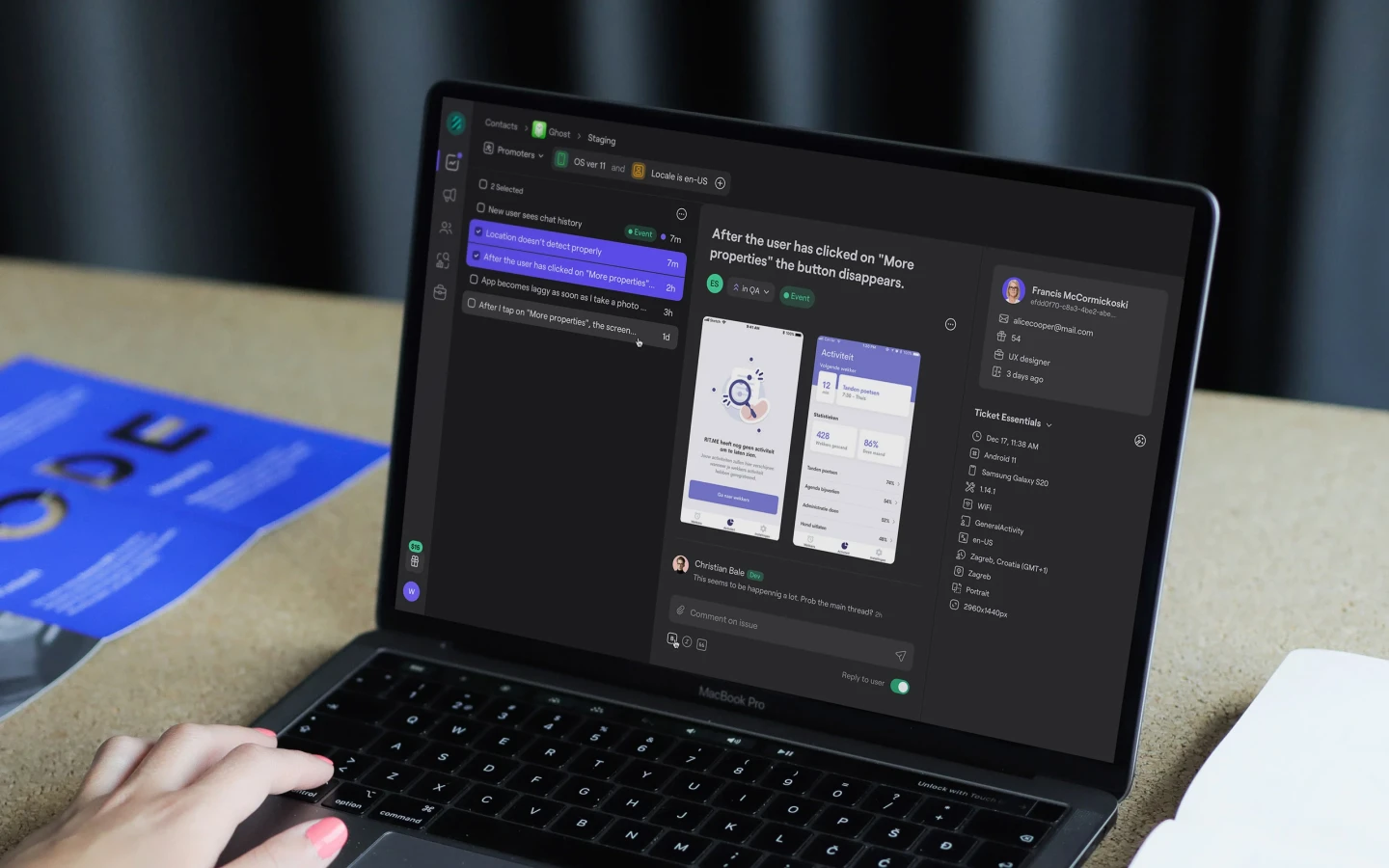

Shake is a great option for catching bugs that surface during this phase and preventing them from reaching end users.

In many ways, the test cases in UAT deal with the same technical, functional, and business requirements as the previous test phases.

Still, the difference and most significant benefit here is that you’ll be doing it from the end user’s perspective.

This can give you fresh insights or reveal errors that are hard to spot from a developer’s perspective.

Performance Testing

Performance testing is the general umbrella term for tests that measure how well certain software performs.

These include factors involving the system’s reliability, stability, load capacity, and response speed.

These types of tests are crucial because you want to make sure your software performs well under real-world conditions.

Without these tests, you risk delivering a subpar experience to users due to slowdowns.

Worse, poor performance can lead to unexpected crashes and downtime, which are expensive for businesses. Just ask Google: a mere five-minute outage in 2013 cost it an estimated $500,000 or more.

Performance testing is a broad topic, and there are many different sub-tests you can do for specific metrics.

Some of the more important ones worth mentioning include stress testing, load testing, configuration testing, and scalability testing.

We’ll cover these next.

Scalability Testing

Scalability testing measures how well the software performs when scaled up. Scaling can be due to added users, transactions, network connections, or other loads on the system.

Scalability testing is a proactive step you do when you plan to scale your software and want to ensure that your system can cope.

For example, say you estimate that a Black Friday promotion will bring 50% more traffic to your e-commerce app. You run a scalability test to make sure your system can still run smoothly under that load.

The test results might tell you that you need to add more bandwidth or server capacity to handle the expected load.

There are many ways to perform scalability testing, which can be done at the hardware or software level. All of them involve repeating a test case with various loads and verifying how the system responds.

The most common and easiest-to-measure metric for scalability testing is the response time, or how fast the software responds to user input.

However, some testers also use throughput, which is the number of user requests that the software can process in a given timeframe.
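The sketch below shows the basic shape of a scalability test: repeat the same test case at increasing load levels and record response time and throughput at each one. `handle_request` is a hypothetical placeholder that simulates 10 ms of server work; in a real test, you would replace it with a call to your system and time the full client-side round trip. It uses only the Python standard library (`concurrent.futures`, `statistics`, and `time`).

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(_: int) -> float:
    """Hypothetical system under test; replace with a real call.

    Returns the observed response time in seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulated 10 ms of server work
    return time.perf_counter() - start


def measure(concurrent_users: int, requests_per_user: int = 5) -> dict:
    """Run the same test case at one load level and record both metrics."""
    total = concurrent_users * requests_per_user
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(handle_request, range(total)))
    elapsed = time.perf_counter() - start
    return {
        "users": concurrent_users,
        "median_response_s": statistics.median(latencies),
        "throughput_rps": total / elapsed,  # requests processed per second
    }


if __name__ == "__main__":
    # Repeat the test at increasing loads and watch how the metrics change.
    for users in (1, 5, 10, 20):
        r = measure(users)
        print(f"{r['users']:>3} users: median {r['median_response_s'] * 1000:.1f} ms, "
              f"{r['throughput_rps']:.0f} req/s")
```

Because the simulated service has no shared bottleneck, throughput here should grow roughly with the number of users while response time stays flat. A real system that stops scaling would show throughput leveling off and response times climbing.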

Scalability testing is often confused with load testing, which is another type of performance test.

While both measure similar metrics, they have enough key differences that make them distinct.

Let’s clear up these differences a bit more.

Load Testing

Similar to scalability testing, load testing also verifies the performance of a system given a particular load.

The difference here is that load testing focuses on the failure point or the load volume that will crash the system.

Let’s continue with our e-commerce app example above.

Say that scalability testing told you that your app could handle the expected Black Friday traffic of 100,000 users.

However, with load testing, you might discover that the point where your app will start to crash is 150,000 users.

Load testing is generally used to spot performance bottlenecks in your software to ensure its reliability when faced with real-world conditions.

The insights you gain tell you which fixes you need to make, whether that means fine-tuning the code or upgrading the underlying hardware.
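A load test is often run as a ramp: increase the load step by step until the error rate crosses an acceptable threshold. In the sketch below, `simulated_error_rate` is a hypothetical model that mirrors the example above (requests fail beyond 150,000 users); in practice, the measurement would come from a load-testing tool. The 1% error threshold and the step size are assumptions you would tune for your own system.

```python
from typing import Callable, Optional


def simulated_error_rate(users: int, capacity: int = 150_000) -> float:
    """Hypothetical stand-in for the system under test: every request beyond
    `capacity` concurrent users fails. In practice this number would come
    from a load-testing tool hitting a real deployment."""
    if users <= capacity:
        return 0.0
    return (users - capacity) / users


def find_failure_point(measure_error_rate: Callable[[int], float],
                       start: int, step: int, max_users: int,
                       max_error_rate: float = 0.01) -> Optional[int]:
    """Ramp the load in fixed steps and return the first level whose error
    rate exceeds `max_error_rate`, or None if the system held up throughout."""
    for users in range(start, max_users + 1, step):
        if measure_error_rate(users) > max_error_rate:
            return users
    return None
```

With `find_failure_point(simulated_error_rate, start=50_000, step=10_000, max_users=300_000)`, the function returns `160000`, the first tested level past the failure point. The step size bounds how precisely the failure point is located, so one option is a second, finer ramp around that level.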

Stress Testing

Stress testing is an approach used to verify a system’s reliability by trying to break it.

Like load testing, stress testing will subject the software to extreme loads and demands.

The difference here is that stress testing is concerned with how the system behaves during a crash instead of when it will happen.

Specifically, stress testing validates how robust a system’s error handling and checking capabilities are when dealing with abnormal or unexpected situations.

The most important attribute this test reveals is recoverability, or the software’s ability to bounce back from a catastrophic error. For fixing app crashes faster than ever, try Shake’s Crash reporting product.
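To illustrate what recoverability means in practice, here is a sketch of a stress test against a hypothetical service with a fixed-size work queue. The test floods the service far beyond its limit, then checks two things: that overload is handled by rejecting work cleanly rather than crashing, and that the service accepts normal requests again once the spike has passed. `BoundedService` and its queue limit are assumptions for the example.

```python
class BoundedService:
    """Hypothetical service with a fixed-size work queue."""

    def __init__(self, max_queue: int) -> None:
        self.max_queue = max_queue
        self.queue: list[int] = []

    def submit(self, job: int) -> bool:
        """Accept a job, or reject it cleanly when the queue is full."""
        if len(self.queue) >= self.max_queue:
            return False  # graceful rejection instead of crashing
        self.queue.append(job)
        return True

    def drain(self) -> int:
        """Process everything queued; returns the number of jobs handled."""
        handled = len(self.queue)
        self.queue.clear()
        return handled


def stress_test(service: BoundedService, burst: int) -> dict:
    """Flood the service far past its limit, then check that it recovers."""
    accepted = sum(service.submit(i) for i in range(burst))
    rejected = burst - accepted
    handled = service.drain()
    recovered = service.submit(-1)  # a normal request after the spike
    return {"accepted": accepted, "rejected": rejected,
            "handled": handled, "recovered": recovered}
```

Running `stress_test(BoundedService(max_queue=100), burst=10_000)` reports 100 accepted and 9,900 rejected jobs, followed by a successful request after recovery.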

Stress testing deals with extreme situations that are unlikely to happen but should be tested anyway, because they just might.

For example, look at what happened with the UK fashion label Goat when a famous royal caused a massive increase in web traffic that crashed their website.

It’s also worth mentioning that most performance testing tools can run any of the tests discussed above. These include Apache JMeter, LoadNinja, and BlazeMeter.

Configuration Testing

Configuration testing is a distinct type of performance testing that identifies which hardware/software/operating system combination works best with your software.

This is a common practice for high-performance applications, such as video games and graphics editing suites.

You’ll often see a list of recommended system requirements that can run the software without any problems. This information is the result of configuration testing.

Running this type of test involves executing test cases under multiple configurations. For instance, you’ll need to test how well your app works on both Windows and macOS.

Or, more commonly, you’ll need to ensure that a website looks consistent across browsers.

Owing to the sheer number of possible hardware and platform combinations, testing all of them is impractical, if not impossible.

Instead, you’ll prioritize which configurations you’ll test based on the requirements of the project.
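Prioritizing configurations can be expressed as a filtered test matrix. In this sketch, the operating systems, browsers, priority values, and unsupported pairs are illustrative assumptions (for example, Safari does not run on Linux), and `page_renders` is a placeholder for a real check such as a browser-automation test. Raising `max_priority` widens the matrix when you have more time or a riskier release.

```python
from itertools import product

OPERATING_SYSTEMS = ["Windows", "macOS", "Linux"]
BROWSERS = ["Chrome", "Firefox", "Safari", "Edge"]

# Hypothetical priorities, e.g. derived from project requirements or analytics.
PRIORITY = {"Chrome": 1, "Safari": 1, "Firefox": 2, "Edge": 2}
UNSUPPORTED = {("Windows", "Safari"), ("Linux", "Safari")}


def configurations(max_priority: int) -> list[tuple[str, str]]:
    """All supported OS/browser pairs whose browser priority is high enough."""
    return [
        (os_name, browser)
        for os_name, browser in product(OPERATING_SYSTEMS, BROWSERS)
        if (os_name, browser) not in UNSUPPORTED and PRIORITY[browser] <= max_priority
    ]


def page_renders(os_name: str, browser: str) -> bool:
    """Placeholder for a real check run on the given configuration."""
    return True


def run_configuration_tests(max_priority: int) -> dict[tuple[str, str], bool]:
    """Run the same test case once per selected configuration."""
    return {cfg: page_renders(*cfg) for cfg in configurations(max_priority)}
```

Here, `configurations(1)` yields four pairs, while `configurations(2)` covers all ten supported pairs.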

Usability Testing

Usability testing is a non-functional test that determines how intuitive or user-friendly a piece of software is.

It’s a distinct test because, compared to other types, it cares more about how easy the software is to use than about whether it works.

Verifying for usability is crucial for any software product, but even more so if it’s intended for public use.

That’s because it doesn’t matter how exceptional a product’s feature is; if it’s a pain to use, then users won’t bother.

Plus, usability is one of the most challenging things to get right. It involves an iterative design approach that requires plenty of user data.

And where do you get such data? Usability testing.

One of the most common and effective approaches to usability testing is to use a prototype.

Because testers interact with an early version of what your app will eventually look like, prototype testing gives you some of the best insights into your user interface (UI).

Feature Testing

Whenever you add a new feature, you need to test for two things. One, is the feature working as intended and fulfilling requirements?

Two, is it integrating well with the software and not breaking any existing functionalities?

Collectively, these two aspects are what feature testing is all about.

The main goal of feature testing is to ensure that the addition of the new feature is not introducing errors of its own.

The more complex the software is, the more vital feature testing is when updating your build.
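Both questions can be captured in tests. The sketch below assumes a hypothetical caption builder that gains a new hashtag-suggestion feature behind a flag, alongside an existing requirement that captions never exceed 30 characters. The first test checks the new feature; the other two check that existing behavior holds with the feature off and on. Had the hashtag been appended after the length check instead of before it, the last test would catch the resulting break.

```python
MAX_CAPTION = 30  # existing requirement: captions never exceed 30 characters


def build_caption(text: str, *, suggest_hashtag: bool = False) -> str:
    """Existing behavior: trim whitespace and enforce MAX_CAPTION.
    New feature (behind a hypothetical flag): append a suggested hashtag."""
    caption = text.strip()
    if suggest_hashtag:
        caption = f"{caption} #photo"
    return caption[:MAX_CAPTION]


# One: does the new feature work as intended?
def test_feature_adds_hashtag():
    assert build_caption(" sunset ", suggest_hashtag=True) == "sunset #photo"


# Two: does existing behavior still hold, with the feature off and on?
def test_existing_behavior_with_feature_off():
    assert build_caption("  sunset  ") == "sunset"


def test_length_limit_still_enforced_with_feature_on():
    assert len(build_caption("x" * 40, suggest_hashtag=True)) <= MAX_CAPTION
```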

Feature testing has many approaches.

In fact, many of the previous techniques we’ve covered (such as unit and integration testing) can be used successfully with it. It all depends on what aspect of a feature you’re evaluating.

For instance, if you want to get feedback from end-users regarding a feature, you can do something similar to an A/B test.

Have beta testers analyze two versions, one with the feature and one without, and see what they think about it.

Feature testing often goes hand-in-hand with regression testing, which we’ll discuss next.

Regression Testing

A regression test verifies whether a software build still performs as it did before introducing a bug fix, update, or new feature.

The goal is to prevent the software from regressing to a previous, less ideal state.

This type of test is crucial because any code changes to the existing system can be unpredictable.

Unfortunately, an apparent lack of regression testing is precisely what hurt the financial firm Knight Capital Group.

In 2012, a software glitch occurred after a major system update, which cost the company $460 million in just 45 minutes.

Regression testing uses test cases from previous stable releases to verify if the current build will still pass.

To save time, you often only need to test parts of the software affected by the changes (called regression test selection).
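Regression test selection can be sketched as a lookup from each test to the modules it exercises. The mapping below is hypothetical (in practice it might come from coverage data recorded on a previous stable release), and `select_regression_tests` simply picks every test that touches a changed file, or all of them when `full_run` is set, as you might after a significant update or a platform port.

```python
# Hypothetical mapping from each regression test to the modules it exercises,
# e.g. gathered from coverage data of a previous stable release.
TEST_COVERAGE = {
    "test_login": {"auth.py", "session.py"},
    "test_take_photo": {"camera.py"},
    "test_share_photo": {"camera.py", "share.py", "session.py"},
    "test_settings_page": {"settings.py"},
}


def select_regression_tests(changed_files: set[str],
                            full_run: bool = False) -> list[str]:
    """Return the tests affected by a change (regression test selection),
    or every test when a full regression run is required."""
    if full_run:
        return sorted(TEST_COVERAGE)
    return sorted(name for name, modules in TEST_COVERAGE.items()
                  if modules & changed_files)
```

For a change to `session.py`, only `test_login` and `test_share_photo` are selected.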

However, regression testing on the entire system is preferred in some cases, such as when porting to a new platform or after a significant update.

Regression testing takes exceptionally well to automation, especially when you’re releasing software builds regularly.

Some great automated regression testing tools include Katalon and Selenium for web and Appium for mobile.

This Is Just the Beginning…

As you can see, software testing is an amazingly deep and fascinating subject. A short article like this simply can’t do it justice.

Still, we hope this article has given you an idea of which testing techniques work best in your project.

The truth is that testing is a complex but fundamental part of the software development life cycle.

Therefore, you need to give it the attention and care it deserves to help you develop high-quality, reliable applications.

If you’d like to know more, check out our article discussing manual and automated testing approaches.